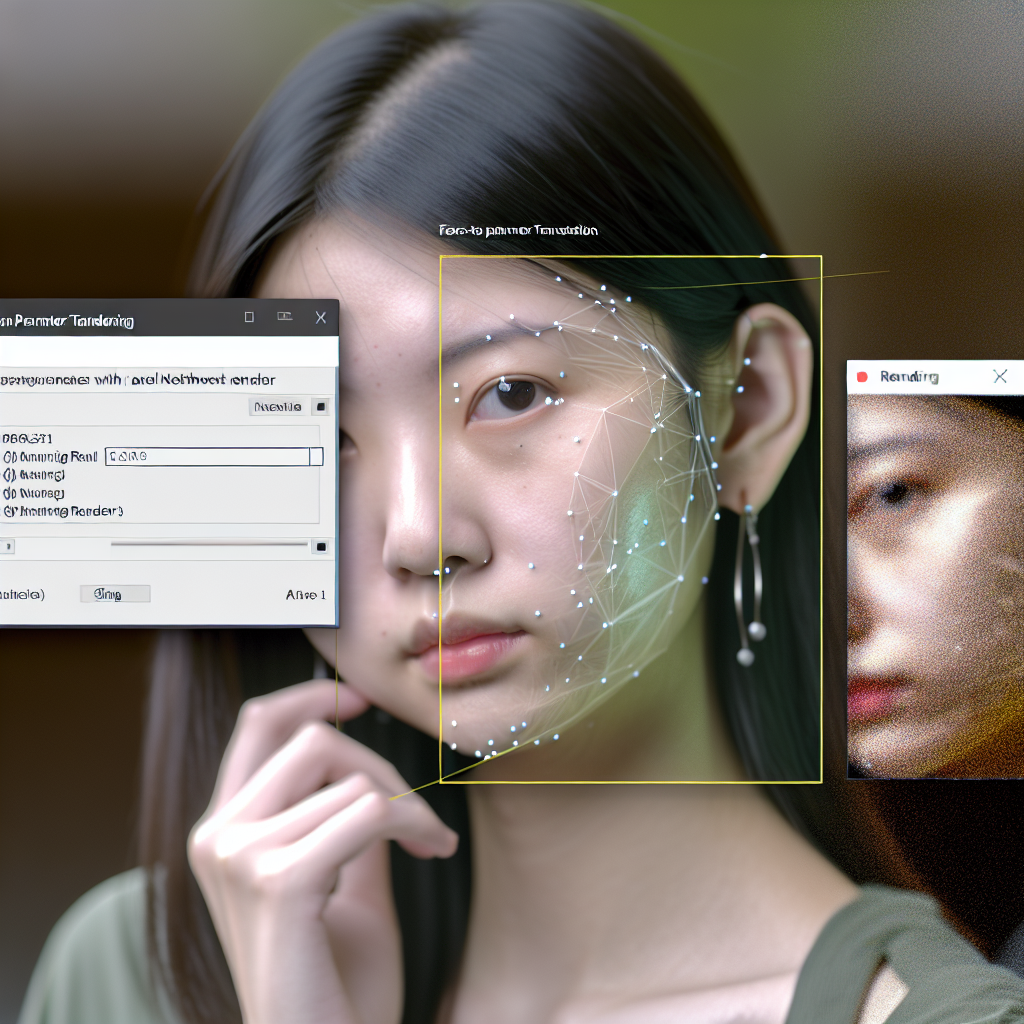

Face-to-parameter translation with neural network renderers maps a facial image directly to the parameters that describe it, such as shape, expression, and head pose. Deep learning models perform this mapping, which supports realistic avatar creation, animation, and personalized virtual experiences. In this article, we’ll explore how neural network renderers make face-to-parameter translation work and where it is applied in practice.

Understanding Face-to-Parameter Translation with Neural Network Renderers

Traditional facial modeling and animation techniques often rely on manual annotation or predefined models, which can be labor-intensive and inflexible. Neural network renderers enable a shift toward automated, data-driven translation of facial images into meaningful parameters. These parameters typically include expression, head pose, lighting conditions, and other attributes that define a person’s face in 3D space.

At the core of neural network-based face-to-parameter translation is the ability of deep learning models, particularly convolutional neural networks (CNNs) and encoder-decoder architectures, to learn complex mappings from raw images to a compact set of parameters. This process involves training on extensive datasets comprising paired facial images and their corresponding parameter annotations, allowing the model to effectively capture the nuances of facial variations and expressions.
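The idea of learning an image-to-parameter mapping from paired data can be sketched in a few lines. The example below is a deliberately tiny stand-in, not a real face model: a single linear layer replaces the CNN encoder, the "images" are four-value vectors, and the paired annotations come from a hypothetical ground-truth map (`TRUE_MAP`) invented for illustration. It only shows the supervised training loop in its simplest form.

```python
import random


def predict(weights, bias, pixels):
    """Linear stand-in for the encoder: flattened image -> parameter vector."""
    return [sum(w * p for w, p in zip(row, pixels)) + b
            for row, b in zip(weights, bias)]


def train(pairs, n_pixels, n_params, lr=0.05, epochs=200):
    """Fit the mapping by stochastic gradient descent on squared error."""
    weights = [[0.0] * n_pixels for _ in range(n_params)]
    bias = [0.0] * n_params
    for _ in range(epochs):
        for pixels, target in pairs:
            pred = predict(weights, bias, pixels)
            for k in range(n_params):
                err = pred[k] - target[k]
                for j in range(n_pixels):
                    weights[k][j] -= lr * err * pixels[j]
                bias[k] -= lr * err
    return weights, bias


# Synthetic paired data (assumption): parameters are a fixed linear
# function of the pixels, so the mapping is exactly learnable.
rng = random.Random(0)
TRUE_MAP = [[0.5, -0.2, 0.1, 0.0],
            [0.0, 0.3, -0.4, 0.2]]


def make_pair():
    pixels = [rng.uniform(-1.0, 1.0) for _ in range(4)]
    params = [sum(w * p for w, p in zip(row, pixels)) for row in TRUE_MAP]
    return pixels, params


pairs = [make_pair() for _ in range(50)]
weights, bias = train(pairs, n_pixels=4, n_params=2)

# Evaluate on an image that was not in the training set.
test_pixels, test_params = make_pair()
estimate = predict(weights, bias, test_pixels)
```

A practical system would swap the linear layer for a convolutional encoder and the synthetic pairs for annotated face images, but the structure of the loop (predict, compare with the annotation, update) stays the same.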

From Pixels to Parameters: The Workflow and Applications

The process typically involves three key stages:

- Image Acquisition: High-quality facial images are captured in real time or drawn from existing datasets. Diverse data improves the model’s ability to generalize across different faces, lighting conditions, and expressions.

- Neural Network Processing: The images are fed into a trained neural network that encodes visual features and predicts the underlying parameters. These parameters may include facial landmarks, pose angles, shape coefficients, and expression vectors.

- Parameter Utilization: The extracted parameters are used to generate 3D face models, animate virtual avatars, or perform face editing tasks. Modern neural renderers can also synthesize new face images based on manipulated parameters, enabling applications like deepfakes, digital avatars, and personalized content creation.
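To make the parameter utilization stage more concrete, here is a minimal sketch of turning predicted parameters into 3D geometry with a linear face model, where the mesh is a mean shape plus weighted shape and expression offsets. The `FaceParams` container, the `build_face` helper, and the two-vertex "mesh" are all hypothetical and chosen for illustration. Pose is reduced to a single yaw angle to keep the example short.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Vertex = Tuple[float, float, float]


@dataclass
class FaceParams:
    """Hypothetical container for the predicted parameters."""
    yaw: float                # head rotation about the vertical axis, radians
    shape: List[float]        # identity (shape) coefficients
    expression: List[float]   # expression coefficients


def build_face(mean: List[Vertex],
               shape_basis: List[List[Vertex]],
               expr_basis: List[List[Vertex]],
               params: FaceParams) -> List[Vertex]:
    """Linear face model: mean + shape offsets + expression offsets, then yaw."""
    cos_y, sin_y = math.cos(params.yaw), math.sin(params.yaw)
    weighted = (list(zip(params.shape, shape_basis)) +
                list(zip(params.expression, expr_basis)))
    vertices = []
    for i, (x, y, z) in enumerate(mean):
        for coeff, basis in weighted:
            dx, dy, dz = basis[i]
            x, y, z = x + coeff * dx, y + coeff * dy, z + coeff * dz
        # Rotate about the y axis to apply the head pose.
        vertices.append((cos_y * x + sin_y * z, y, -sin_y * x + cos_y * z))
    return vertices


# Tiny two-vertex "mesh" with one shape and one expression direction.
mean = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
shape_basis = [[(0.0, 1.0, 0.0), (0.0, 1.0, 0.0)]]
expr_basis = [[(0.0, 0.0, 0.0), (0.0, 0.0, 1.0)]]
params = FaceParams(yaw=0.0, shape=[2.0], expression=[0.5])
face = build_face(mean, shape_basis, expr_basis, params)
```

Editing a face then amounts to changing a coefficient and rebuilding: raising `params.expression[0]` moves only the vertices that the expression direction touches, while the identity coefficients stay fixed.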

This automation streamlines workflows in entertainment, gaming, virtual reality, and teleconferencing by providing fast and accurate face modeling. Moreover, advances such as differentiable renderers allow face parameters to be optimized jointly with the rendered output: because gradients of an image-space error can flow back through the renderer to the parameters, the parameters can be adjusted until the rendered face matches the target, leading to more realistic results.
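One common way to use a differentiable renderer is to fit parameters by gradient descent on the difference between the rendered image and a target image. The sketch below illustrates that loop under strong simplifying assumptions: the "renderer" is a toy function that is linear in the parameters, the images are four-pixel lists, and the names `render`, `fit_parameters`, and `basis_images` are hypothetical. A real differentiable renderer is far more complex, but the optimization pattern is the same.

```python
def render(params, mean_image, basis_images):
    """Toy differentiable renderer: the image is linear in the parameters."""
    return [m + sum(p * b[i] for p, b in zip(params, basis_images))
            for i, m in enumerate(mean_image)]


def fit_parameters(target, mean_image, basis_images, lr=0.1, steps=500):
    """Recover parameters by gradient descent on the image-space error."""
    params = [0.0] * len(basis_images)
    for _ in range(steps):
        rendered = render(params, mean_image, basis_images)
        residual = [r - t for r, t in zip(rendered, target)]
        # Gradient of 0.5 * sum(residual**2) with respect to each parameter,
        # obtained by differentiating through the (linear) renderer.
        grads = [sum(res * b[i] for i, res in enumerate(residual))
                 for b in basis_images]
        params = [p - lr * g for p, g in zip(params, grads)]
    return params


# Assumed toy setup: a flat gray mean image and two basis images.
mean_image = [0.5, 0.5, 0.5, 0.5]
basis_images = [[1.0, 0.0, -1.0, 0.0],
                [0.0, 1.0, 0.0, -1.0]]
true_params = [0.3, -0.2]

# Render a target from known parameters, then try to recover them.
target = render(true_params, mean_image, basis_images)
recovered = fit_parameters(target, mean_image, basis_images)
```

Because the error is measured on the rendered image rather than on annotated parameters, this kind of fitting does not need parameter labels for the target face.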

Conclusion

Face-to-parameter translation via neural network renderers offers a powerful and scalable solution for generating realistic facial models and animations. By converting 2D images into precise 3D parameters, this technology enhances applications ranging from virtual avatars to augmented reality. As neural networks continue to evolve, we can expect even more sophisticated, real-time facial synthesis that will transform digital interactions and content creation.